It may be the only presidential executive order ever inspired by Tom Cruise.

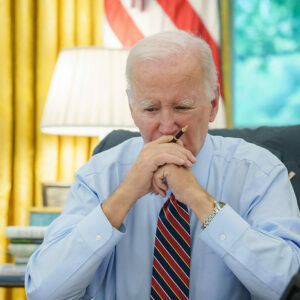

Earlier this year at Camp David, President Joe Biden settled down to watch the latest “Mission: Impossible” movie installment. In the film, Cruise and his IMF team face off against a rogue, sentient artificial intelligence (AI) that threatens all of humanity. The movie reportedly left the president shaken.

“If he hadn’t already been concerned about what could go wrong with AI before that movie, he saw plenty more to worry about,” said White House Deputy Chief of Staff Bruce Reed.

Reed said Biden is “impressed and alarmed” by what he has seen from AI. “He saw fake AI images of himself, of his dog… he’s seen and heard the incredible and terrifying technology of voice cloning, which can take three seconds of your voice and turn it into an entire fake conversation.”

Given that the cutting-edge communications technology when Biden was born was black-and-white television, it’s no surprise he is awed by the power of AI. His order adds new layers of government regulation to this quickly developing technology.

For example, the order will “require that developers of the most powerful AI systems share their safety test results and other critical information with the U.S. government.” It also instructs federal agencies to establish guidelines “for developing and deploying safe, secure, and trustworthy AI systems.” And companies doing the cutting-edge work that can keep the U.S. competitive with China are required to notify the federal government when they are training their models and share the results of “red-team safety tests.”

According to Reason Magazine’s science reporter Ronald Bailey, “Red-teaming is the practice of creating adversarial squads of hackers to attack AI systems with the goal of uncovering weaknesses, biases, and security flaws. As it happens, the leading AI tech companies— OpenAI, Google, Meta—have been red-teaming their models all along.”

Tech experts say that movies from the multiplex aren’t necessarily the best sources for setting public policy.

“I just don’t understand why that’s where people’s heads go,” Shane Tews, a cybersecurity Nonresident Senior Fellow at the American Enterprise Institute, said. “And I do think it really is because they don’t have a lot of examples to replace that ‘Terminator’ feeling.

“I think as we start to understand how to have a better human-machine relationship, that it’ll work better,” Tews added. “I’m not sure that people will necessarily get it, but people are kind of in a crazy moment in their heads right now.”

The White House has embraced the crazy, critics say, spreading fear about existential threats.

“When people around the world cannot discern fact from fiction because of a flood of AI-enabled mis- and disinformation, I ask, is that not existential for democracy?” said Vice President Kamala Harris during a speech at the United Kingdom’s AI Safety Summit. In her mind, a variety of issues need to be addressed to manage the growing AI industry. “We must consider and address the full spectrum of AI risk — threats to humanity as a whole, as well as threats to individuals, communities, to our institutions, and to our most vulnerable populations.”

Biden’s proposal was praised by the Electronic Frontier Foundation. The digital rights nonprofit supports regulating the use of AI in certain situations, like housing, but not the technology itself.

“AI has extraordinary potential to change lives, for good and ill,” Karen Gullo, an EFF analyst, said. “We’re glad to see the White House calling out algorithmic discrimination in housing, criminal justice, policing, the workplace, and other areas where bias in AI promotes inequity in outcomes. We’re also glad to see that the executive order calls for strengthening privacy-preserving technologies and cryptographic tools. The order is full of ‘guidance’ and ‘best practices,’ so only time will tell how it’s implemented.”

Other technology policy analysts, like Adam Thierer, panned the executive order.

“Biden’s EO is a blueprint for back door computing regulation that could stifle America’s technology base,” said Thierer, a resident senior fellow, Technology & Innovation, for R Street Institute. “It will accelerate bureaucratic micro-management of crucial technologies that we need to be promoting, not punishing, as we begin a global race with China and other nations for supremacy in next-generation information and computational capabilities.”

He has argued that people shouldn’t be pessimistic about new technology or retreat into what he calls “technological stasis.”

And Doug Kelly, CEO of the American Edge Project, said the temptation of Washington, D.C., to overregulate could cost the U.S. economy.

“A report by PwC estimates the global economic effect of AI to be $14 trillion by 2030, with China and North America projected to be the biggest winners in terms of economic gain,” Kelly wrote for DCJournal. “But if regulators in Washington or Europe tie the hands of AI innovators, we run the risk of falling behind in AI leadership to China, which has invested hundreds of billions in an attempt to gain global AI superiority.”

AI is already used in autocorrect, autocomplete, chatbots, translation software, and programmable things like robot vacuums or automatic coffee makers. Popular sci-fi characters like C-3PO, R2-D2, and JARVIS are also AI-driven.

Tews suggests people get frustrated when technology doesn’t work as it should. “I think people project that onto what happens when I get into these situations where it’s me and this machine that’s not acting appropriately – I’m not getting the net result of what I want, and I don’t want there to be more of that in my life.”